tecznotes

Michal Migurski's notebook, listening post, and soapbox. Subscribe to ![]() this blog.

Check out the rest of my site as well.

this blog.

Check out the rest of my site as well.

Dec 31, 2007 1:36am

jaron lanier

What's wrong with Jaron Lanier?

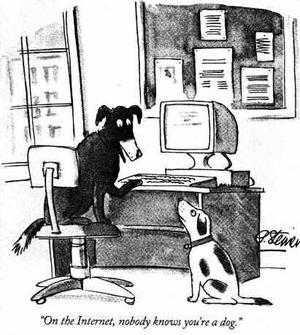

Last month, he wrote a NYTimes opinion piece, Pay Me For My Content, arguing that it's up to "us" (geeks) to figure out how to make the Internet less free so that writers can get paid:

To help writers and artists earn a living online, software engineers and Internet evangelists need to exercise the power they hold as designers. Information is free on the Internet because we created the system to be that way. ... We owe it to ourselves and to our creative friends to acknowledge the negative results of our old idealism. We need to grow up.

Now, he has a piece in Discover, Long Live Closed-Source Software!, arguing that open source software unsuitable for "innovation":

Open wisdom-of-crowds software movements have become influential, but they haven't promoted the kind of radical creativity I love most in computer science. If anything, they've been hindrances. ... Linux is a superbly polished copy of an antique, shinier than the original, perhaps, but still defined by it.

Why did the adored iPhone come out of what many regard as the most closed, tyrannically managed software-development shop on Earth? An honest empiricist must conclude that while the open approach has been able to create lovely, polished copies, it hasn't been so good at creating notable originals.

This goes on until Lanier mires himself in a loopy biological metaphor about cellular membranes and finally falls back on the twin Old Faithfuls, "I get so much heat for my dangerous, minority opinion" and "I know Richard Stallman".

While I have my agreements and disagreements with both pieces, I'm trying to figure out what Jaron Lanier is trying to do by publishing these views as he wears his Linden Labs hat. I can see how both lines of argument support Linden's business model: reinventing scarcity so their users' creations can command monetary value (horrifying example: unicorn babies via interspecies sex). What else is going on here? Can anyone familiar with Lanier's history clue me in on where his arguments come from, and where they're going?

Sepcifically, where ever did the NYTimes opinion originate? There have been plenty of examples of closed networks in the past, e.g. AOL, MSN, Prodigy, Compuserv, all of which have been crushed by the internet. In that sense, Free has already trumped Closed, and there's nothing us geeks "need" to do but enjoy it. In another sense, there are plenty of excellent examples of thriving markets, stores, and closed communities built on the internet and the web that implement the gettin'-paid features Lanier wants to see. What does Lanier want to see done differently? Credit card authorization built into TCP/IP?

Dec 17, 2007 8:16am

open gov

Last week, Carl Malamud had Shawn and I as guests at his Open Government Working Group meeting, held in O'Reilly's Sebastopol offices.

Ethan Zuckerman has one of the more comprehensive write-ups of this excellent event, as do John Geraci and Nat Torkington. Joseph Hall recorded Shawn's O.G. gang sign for posterity.

All I've got is this bag of links to mentioned projects and people, and the lasting conviction that Tom Steinberg's guruhood derives from him having tried everything.

Dec 3, 2007 7:42am

alaskan urbanism

For comparison:

"The last bit of Earth unclaimed by any nation-state was eaten up in 1899. Ours is the first century without terra incognita, without a frontier. Nationality is the highest principle of world governance--not one speck of rock in the South Seas can be left open, not one remote valley, not even the Moon and planets. This is the apotheosis of "territorial gangsterism." Not one square inch of Earth goes unpoliced or untaxed"

- Hakim Bey, found at Temporary Personal Urbanisms via Adam.

We stole countries! That's how you build an empire. We stole countries with the cunning use of flags! Sail halfway around the world, stick a flag in. "I claim India for Britain." And they're going, "You can't claim us. We live here! There's five hundred million of us." "Do you have a flag?" "We don't need a flag, this is our country you bastard!"

- Eddie Izzard, rendered in Lego.

Imagine living hundreds of miles from your nearest neighbor, having groceries and mail delivered by airplane a few times each year, and battling long, harsh winters with temperatures that plummet to -51 C. Such are the living conditions chosen by the hearty few who inhabit America's last frontier: the Alaskan bush - a spectacular land of rivers and mountains so remote that many remain unnamed. Through the cameras of National Geographic, you'll enter the lives of four families who have turned their backs on civilization to fulfill their dreams of living off the land. Join these modern-day pioneers as they face the daily challenges of survival - hunting for food, staying warm, and fending off grizzlies. You'll experience America's pioneering spirit through these remarkable people who are BRAVING ALASKA!

- Astonishing National Geographic documentary, very much worthwhile. Watch it, buy it.

Nov 24, 2007 11:47pm

univega

Last July, I found a crusty old 80's Univega road bike left out for the trash collectors across the street from our apartment. I'd been considering a project bike of some kind ever since getting and loving the IRO, and this seemed like a low-cost way to noodle with a new bike without breaking the bank. Today, after four months, I bent one of the handlebars back into shape, put on some symbolic tape, and finally called it done:

I've actually been using this thing as my primary bike for a few weeks now, mostly to work and on errands, but also the occasional fun ride.

My original idea was that it would be a utility/beater, so I made sure to get a rack and basket for the back. Doing complete food shopping runs and being able to haul a reasonable amount of stuff is liberating... faster than walking, no time wasted with parking, and loaded with endorphin-producing self-righteousness. I also took the whole thing to Adam at Pacific and asked him to check my work for me... there were a few loose or ungreased bits, and he rebuilt the bottom bracket.

I ended up keeping a lot of the original parts that weren't rusted into lumps. The front brake with giant, goofy lever had to stay.

The original wheels and cranks were garbage. I got these 27" Weinmann rims / Formula hubs from E-bay, and the Sugino cranks from Craigslist.

The first loose build had the cranks on the wrong side. Oops.

Originally I was expecting to keep just the frame. I had also planned to paint it, but I wasn't sure if it was going to be worth keeping. It's turned out to be as good a ride as my track bike, possibly even more comfortable due to the loopy, springy steel frame. I may paint it yet, though I'm certainly not going to drop $200+ to get it done professionally. Ultimately, it'd be nice for it to look like Scott Meyer's Univega on FGG.

Here's how I first found it:

Nov 20, 2007 9:03am

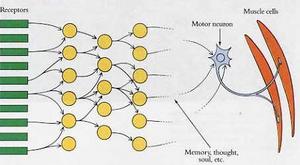

blog all dog-eared pages: science in action

Brian Marick's recent series on Actor-Network Theory (parts I, II, III, and IV) reminded me to dig up Science In Action for a fresh books post. I read this book a little over a year ago, after becoming interested in the philosophy of science through Karl Popper, and further diving has since led me to Paul Feyerabend, Thomas Kuhn, and Tracy Kidder's Soul Of A New Machine.

There are two big ideas I took away from this 20-year-old Bruno Latour book about the workings of science and technology. One is a figure-ground reversal akin to the NRA's famous slogan, "guns don't kill people, people kill people". The second is a description of the social conditions that make science possible. Latour frames his argument by introducing the concept of technoscience, his term for the kind of scientific inquiry that needs people, work, equipment, and funding - national laboratories, cancer research, particle physics, the sort of projects that Russell Davies recently described as thirteen smart guy problems in a post about Malcolm Gladwell.

The figure-ground reversal substitutes the common diffusion model with Latour's translation model. The former explains progress in terms of "ideas moving through society", while the latter places the people who act upon ideas in the foreground. People with desires and beliefs occupy the active role in Latour's world, moving ideas forward if they feel their own plans and agendas to be supported. Latour asserts that the diffusion model provides an inaccurate picture of technoscience, because it fails to account for the conscious agency of the technoscientists themselves. The book is well-stocked with examples of scientific and technological progress explained in terms of the people who linked their fates to a particular theory or invention: General Data's Tom West in Soul Of A New Machine, Rudolf Diesel's engine (and the MAN engineers and mechanics who eventually made it work), and the hypothetical "boss" of a biology laboratory.

Latour also shows how a social division between an inside (within the lab) and an outside (out in the world) make scientific and technical work possible. It is necessary to follow both to make any sense of "where" science happens: the outside supports the inside, allowing it to specialize, channeling funding, equipment, and personnel into the lab by enlisting and guiding the self-interest of universities, governments, and foundations towards to the interests of the lab itself. At the same time, the inside justifies the outside, producing results (process, technology) that fulfills the interests of those outside. On the boundary between the inside and outside sits the boss-figure, the scientist or engineer who motivates the lab and fights for its continued survival. "Firefighter up, cheerleader down" as my friend and first boss Darren used to say.

Science In Action has been quite a ride, and I've tried to apply its observations to my own company in a number of ways. For one, it has been instructive to think in terms of inside/outside with our activities, switching between "doing the work" and "talking about the work", having people specialize in one but not the other, and recognizing the importance of aligning the broader world's interests with our own. The time this becomes unusually rewarding are probably more frequent than we rightfully deserve: months of digging deep into nothing by maps, followed by a string of projects focused on images or time. It means we can juggle a lot of balls in the air without everyone fragmenting off into their own private corners. I've also recently sat in on the standards process behind OAuth, and have tried to judge it by Latour's translation model to see where good ideas were being moved about through conscious alignment of many groups' self-interests. I found this quite instructive.

Latour also has a way of describing the idea of a black box that resonates deeply. When I think back to my first visits to San Francisco (upper Haight when in High School, Bahia Cabana, the Mission, and downtown early in college), the city had not yet solidified in my mind, and I encountered each neighborhood on its own terms without a clear understand of how they fit together. The process by which novelty is transformed into familiarity and later background marks the passage of time. Sometimes it'd be nice to unlearn things at will.

Pages 91-92, on reification:

All biologists now take 'protein' for an object; they do not remember the time, in the 1920s, when protein was a whitish stuff that was separated by a new ultracentrifuge in Svedberg's laboratory. At the time protein was nothing but the action of differentiating cell contents by a centrifuge. Routine use however transforms the naming of an actant after what it does into a common name. This process is not mysterious or special to science. It is the same with the can opener we routinely use in our kitchen. We consider the opener and the skill to handle it as one black box which means that it is unproblematic and does not require planning and attention. We forget the many trials we had to go through (blood, scars, spilled beans and ravioli, shouting parent) before we handled it properly, anticipated the weight of the can, the reactions of the opener, the resistance of the tin.

Page 107, on phasing:

If the notion of discrete phases is useless, so, too, is that of trajectory. It does not describe anything since it is again one of the problems to be solved. Diesel indeed claimed that there was one trajectory which links his seminal patent to real engines. This is the only way for his patents to be 'seminal'. But this was disputed by hundreds of engineers claiming that the engine's ancestry was different. Anyway, if Diesel was so sure of his offspring, then why not call it a Carnot engine since it is from Carnot that he took the original idea? But since the original patent never worked, why not call it a MAN engine, or, a constant pressure air injection engine? We see that talking in phases in a trajectory is like taking slices from a pate made from hundreds of morsels of meat. Although it might be palatable, it has no relation whatsoever to the natural joints of the animal.

Page 137, on cameras and black boxes:

Let us remember Eastman's Kodak camera. It was simpler to operate than anything else before. 'Push the button, we'll do the rest,' they said. But they had to do the rest, and that was quite a lot. The simplification of the camera that made it possible to interest everyone in its dissemination in millions of copies had to be obtained by the extension and complication of Eastman's commercial network. When you push the button you do not see the salesmen and the machines that make long strips of celluloid films and the troubleshooters that make the coating stick properly at last; you do not see them, but they have to be there none the less. If they are not, you push the button and nothing happens. ... If we have understood this, then we may draw the conclusions from the two first parts of this chapter: the black box moves in space and becomes durable in time only through the actions of many people; if there is no one to take it up, it stops and falls apart however many people may have taken it up for however long before. But the type, number, and qualifications of the people in the chain will be modified: inventors like Diesel or Eastman, engineers, mechanics, salesmen, and maybe 'ignorant customers' in the end. To sum up, there are always people moving the objects along but they are not the same people all along.

Page 141, on diffusion vs. translation and why society is a fiction:

Among all the features that differ in the two models, one is especially important, that is society. In the diffusion model society is made up of groups which have interests; these groups resist, accept, or ignore both facts and machines, which have their own inertia. In consequence we have science and technics on the one hand, and a society on the other. In the translation model, however, no such distinction exists since there are only heterogeneous chains of associations that, from time to time, create obligatory passage points. Let us go further: belief in the existence of a society separated from technoscience is an outcome of the diffusion model. Once facts and machines have been endowed with their own inertia, and once the collective action of human and non-human actors tied together has been forgotten or pushed aside, then you have to make up a society to explain why facts and machines do not spread.

Page 152, on specialization and isolation:

...an isolated specialist is a contradiction in terms. Either you are isolated and very quickly stop being a specialist, or you remain a specialist but this means you are not isolated. Other, who are as specialized as you, are trying out your material so fiercely that they may push the proof race to a point where are of your resources are barely enough to win the encounter. A specialist is a counter-specialist in the same way as a technical article is a counter-articles (Chapter 1) or a laboratory is a counter-laboratory (Chapter 2).

Page 155, defining outside and inside:

This case shows how important it is to decide who are the people to study. Depending on which scientist is followed, completely different pictures of technoscience will emerge. Simply shadowing West or the boss will offer a businessman's view of science (mixture of politics, negotiation of contracts, public relations); shadowing the microkids or the collaborators will provide the classic view of hard-working white-coated scientists wrapped up in their experiments. In the first case we would be constantly moving outside the laboratory; in the second, we would stay deep inside the laboratory. Who is really doing research? Where is the research really done?

Page 156, more on inside and outside:

The first lesson to be drawn from these examples is rather innocuous: technoscience has an inside because it has an outside. There is a positive feedback loop in this innocuous definition: the bigger, the harder, the purer science is inside, the further outside other scientists have to go. It is because of this feedback that, if you get inside a laboratory, you see no public relations, no politics, no ethical problems, no class struggle, no lawyers; you see science isolated from society. But this isolation exists only in so far as other scientists are constantly busy recruiting investors, interesting and convincing people.

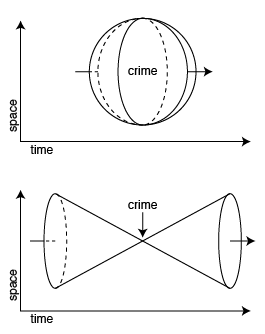

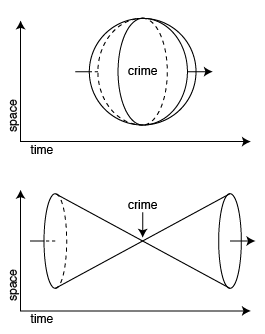

Pages 231-232, on modeling space and time:

Professor Bijker takes a metre-long plaster model of a new dam, fixes it into place and launches a first round of tides shortened to twelve minutes; then he takes it out, tries another one and continues. Sure enough, another 'Copernican revolution' has taken place. There are not that many ways to master a situation. Either you dominate it physically; or you draw on your side a great many allies; or else, you try to be there before anybody else. How can this be done? Simply by reversing the flow of time. Professor Bijker and his colleagues dominate the problem, master it more easily than the port officials who are out there in the rain and are much smaller than the landscape. Whatever may happen in the full-scall space-time, the engineers will have already seen it. They will also have become slowly acquainted with all the possibilities, rehearsing each scenario at leisure, capitalising on paper possible outcomes, which gives them years of experience more than others. The order of time and space has been completely reshuffled. Do they talk with more authority and more certainty than the workmen building the real dam there? Well, of course, since they have already made all possible blunders and mistakes, safely inside the wooden hall in Delft, consuming only plaster and a few salaries along the way, inadvertently flooding not millions of hard-working Dutch but dozens of metres of concrete floor.

Pages 248-249, where it all breaks down:

When the architects, urbanists and energeticians in charge of the Frangocastello solar village project in Crete had finished their calculations in early 1980 they had in their office, in Athens, a complete paper scale model of the village. They knew everything available about Crete: solar energy, weather patterns, local demography, water resources, economic trends, concrete structures and agriculture in greenhouses. They had rehearsed and discussed every possible configuration with the best engineers in the world and had triggered the enthusiasm of many European, American, and Greek development banks by settling on an optimal and original prototype. Like Cape Canaveral engineers the had simply to go 'out there' and apply their calculations, proving once again the quasi-supernatural power of scientists. When they sent their engineers from Athens to Frangocastello to start expropriating property and smoothing out the little details, they met with a totally unexpected 'outside'. Not only were the inhabitants not ready to abandon their lands in exchange for houses in the new village, but they were ready to fight with their rifles against what they took as a new American atomic military base camouflaged under a solar energy village. The application of the theory became harder every day as the mobilisation of opposition grew in strength, enrolling the pope and the Socialist Party. It soon became obvious that, since the army could not be sent to force Cretans to occupy willingly the future prototype, a negotiation had to start between the inside and the outside. But how could they strike a compromise between a brand new solar village and a few hundred shepherds who simply wanted three kilometres of asphalted road and a gas station? The compromise was to abandon the solar village altogether. All the planning of the energeticians was routed back inside the network and limited to a paper scale model, another one of the many projects engineers have in their drawers. The 'out-thereness' had given a fatal blow to this example of science.

Page 249, networks and a conclusion by way of prediction:

So how is it that in some cases science's predictions are fulfilled and in some other cases pitifully fail? The rule of method to apply here is rather straightforward: every time you hear about a successful application of science, look for the progressive extension of a network. Every time you hear about a failure of science, look for what part of which network has been punctured. I bet you will always find it.

Nov 11, 2007 7:54am

more like faumaxion

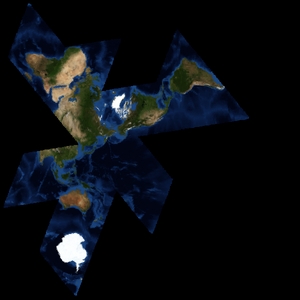

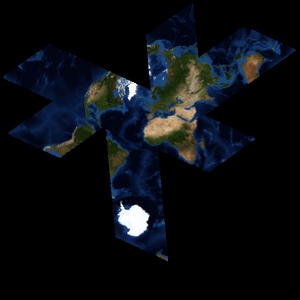

Earlier this week I posted a bungled attempt at implementing Buckminster Fuller's Dymaxion World Map. Owing to a rainy Saturday, that first pass at understanding the projection has matured into something a bit more stable and applicable.

I've been working from Robert Gray's C Implementation of the world map, but after getting it working I ended up discarding it. There are a few limitations with Fuller's projection math and Gray's implementation. I decided to stick with the icosahedron layout on the original map, but switched to the gnomonic projection, similar to Fuller's but blessed with an inverse:

... in computer applications where you "click" on a position on the flat map to get an (x,y) coordinate pair, and you have to convert this to the corresponding (longitude, latitude) coordinate pair, you would have to "loop through" Fuller's projection method several times to get an approximate answer whereas in the Gnomonic case, there is no looping, you have an exact "inverse" equation. -Robert Gray

The gnomonic does pretty much exactly the right thing, in much less space. In effect, each triangular face of the main icosahedron becomes a little projection of its own, accurate at the center and a little less-so at the edges. The general idea is that maps can be arranged about any point on the earth's surface without computationally expensive image reprojection, and with a minimum of surface tearing near the center.

Gray's example code also has just one unfolding of the map hard-coded, making it difficult to create flexible arrangements of land masses for specific needs. It's more interesting to have a version that supports a variety of layouts, like these views centered on various parts of the Atlantic:

The surface coloration I use is from NASA's Blue Marble satellite image set, something I've written about before. I'm not yet sure how to go about making the faumaxion code public, but I imagine that it may find its way into Modest Maps some time in the future.

Nov 6, 2007 8:41pm

not necessarily dymaxion

This is the first output of a raw Python port of the Fuller Dymaxion projection.

It's not quite the thing, but cool nonetheless:

Oct 29, 2007 7:08am

blog all dog-eared pages: where the suckers moon

(This is a regular series, see previous entries on Kuhn, Whyte, Buxton, Kidder, Whyte again, Levinson, Edgerton, and a recent name-check from Adam)

Where The Suckers Moon is Randall Rothenberg's account of Subaru's search for an advertising agency in the early 1990s and the campaign that resulted. It traces the strange roots of the car company, diverts into histories of the advertising industry, communications, semiotics, and psychology, and follows the creation of a campaign from its first creative development through the trenches of production and out to public release.

The first half of the book is largely historical, and doesn't provide a lot of quotable material for these excerpts. That's not to say it isn't good reading, just doesn't chunk well.

Reading this book reminded me of the blessing and curse that is YouTube. A blessing, because many of the early 1990s ads described in the narrative are readily available on Google's monster video sharing site, such as Tibor Kalman's work for Pepe Jeans. This ad has lurked in my subconscious for the past 17 years. A curse, because anything of recent interest is inevitably scrubbed from YouTube at a rapidly accelerating clip. Exhibit A is my post on the London 2012 identity I love so dearly, whose linked videos have been pulled for bullshit copyright reasons. I have a half a mind to write the minimal amount of Python and Actionscript it would take to mirror posted videos and keep them as presentable as they are now - the hive mind shared memory functions of sites like YouTube and OiNK are as deeply valuable as the communicative functions of the recorded media they store and share.

Anyway, on to the excerpts.

Page 211, on pomo:

Beyond placing emphasis in filmmaking technique, Wieden & Kennedy's Lou Reed ad helped foster the development of a postmodern sensibility in the advertising industry. In the minds of the youngsters who were entering the business, advertising no longer had to be advertising, or entertainment. It could be, in Larry Bridge's phrase, "metacommentary": art that explicated, through irony, camp, iconic references or self-reference, the commercial itself and the consumer culture of which it was a part. It was a living, evolutionary answer to Walter Benjamin's denial that art could exist in the modern era - "that which withers in the age of mechanical reproduction is the aura of the work of art."

Page 212-213, on pomo some more:

It may have looked like "metacommentary", but semioticians term it a "false metacommunication" because, through its production techniques, it pointed the viewer in a wrong direction - toward the preferred interpretation of freedom and license - in order to mask its covert purpose, selling mass-manufactured goods, which it did by the implicit linkage of the product with the message of independence. Robert Goldman and Steve Papson, sociologists who have studied this school of advertising, refer to it, with good reason, as "the postmodernism that failed."

Page 225, on conflicted creative direction:

And the truth was this: Jerry Cronin, the new creative director on Subaru of America's advertising account, despised cars. ... "I always hated cars," Jerry said one day in his office. "I didn't own a car until I was twenty-eight. We had no money when I was growing up. We always had these old Ramblers. I always heard the old man complaining about cars. Every time he left the house, he never knew whether the car would get him home." ... "People are far too attached to their cars. I want them to see that cars are a hunk of metal. Automotive advertising is the biggest lie of all time. You want to live better, look better - buy a grill, go to the gym!"

Page 230, on art direction influences:

Jerry was thinking. What he was looking for was inspiration. He had already decided that the look he wanted derived from the heroic Social Realism prevalent in public and commercial art during the 1930s - the "dawn-of-the-Machine-Age" style popularized in friezes by the Works Progress Administration and photographs in Life. That this look was also prevalent in the hortatory art of both Hilter's Germany and Stalin's Soviet Union did not escape the agency men. Larry sent his assistant to a local video store to pick up a copy of Leni Riefenstahls's Olympiad, a celebration of Nazi power, to review it for cinematographic stimulation.

Page 301, on Chait/Day and fighting clients:

Watching the agency win, and build, Apple Computer and Yamaha motorcycles and other prestigious accounts taught Luhr the essential lessons of account management in the era of postmodern advertising. To do good work was the purpose of advertising, he learned. And good creative people didn't operate by the same rules by which, say, good bankers do. And clients don't always recognize the value of good creative work or good, quirky creative people, so an account exec had to be prepared to fight the client, anger the client, even risk dismissal or fire the client if the going got too debilitating.

Page 309, on slow hiring:

Everything Wieden & Kennedy was grew out of a creative philosophy that required immersion in the convolutions of American culture, everything the agency could be depended on the collegial spirit of the men and women who filled its offices. Although hundreds of creatives at other agencies across the land would have overturned their lives for a chance to work, however briefly, at Wieden & Kennedy, Dan was not an easy mark. You can't just... just... hire people overnight! You have to talk to them, again and again and again, test them, tease them, scrutinize their work and their philosophies. Since it was difficult to schedule time with Dan (his insistence on approving everything that went on in the agency made him difficult to pin down) Wieden & Kennedy generally took months to hire even relatively junior copywriters and art directors. On the Subaru account, the delays took their toll.

Page 328, on faith:

Faith, while hard won, is easily lost. It can be shaken by many things: misguided words, obstinacy, an inability to grow along with one's partner, suddenly seeing the partner through eyes unblinded by desire. Relationships, of course, are maintained by faith. No matter how fervently contemporary ad agencies insist that they are entertainers or artists, advertising is still founded on relationships. So in advertising, as in marriage, a loss of faith can be debilitating. It is the only quality, really, that binds a client to an agency.

Page 415, on reading between the lines:

"And so this campaign really does explain the key features of the car," Walter said, "in a very simpleminded way, not unlike the way Lexus is doing it." (Features: That meant Wieden & Kennedy had learned to talk about engineering. Simpleminded: That showed the agency was not striving to be creative. Lexus: That proved the agency had learned to sell by overselling.) "It hits on something we learned in the research: Impreza considerers need to be sold." (Research: That meant the work wasn't the invention of artsy types. Sold: That spoke again to the agency's new willingness to huckster.) "It also has a new tactical element, a videotape that we'll send consumers and ask them to respond to, via toll-free number." (Tactical: That showed Wieden & Kennedy was ready to deploy gimmicks. Toll-free number: The kind of gimmicks used by the big, boring agencies in New York.)

Page 427, in conclusion:

Subaru of America had learned the lesson of advertising. Advertising did not work by entertaining or assaulting the intellect of its audience, as the company's previous agencies had believed. Nor did it work through subliminal manipulation, as so many Americans, ever on the lookout for conspiracies, misguidedly thought. Instead, advertising, as the great ad man Bruce Barton had acknowledged decades before, was "something big, something splendid, something which goes deep down into an institution and gets hold of the soul of it." To succeed, advertising cannot seek to invent a new soul. Instead, it must reinforce and redirect the existing image. It must serve as a form of mythology, providing the corporation's various and often competing constituencies - of which consumers are only one of many - heroes, villains, principles, rules of conduct and stories with which they can rally the faithful to remain true to the cause. Only then, with luck and effort, can they win new converts.

Oct 26, 2007 12:17am

animated gif theatre

Three good ones today:

Do Not Fall Down

(via Enough Of Your Borax, Poindexter)

So This Bird Walks Into A Store...

(via The Animated GIF Appreciation Society)

Animéted GIF

(also via Enough Of Your Borax, Poindexter)

Previously: Bunny Emoticons.

Oct 11, 2007 1:21am

atkinson dithering

Turns out Atkinson dithering is really easy, for that classic Mac look!

Here's a minimal Python implementation (requires PIL).

Oct 10, 2007 9:51pm

branding a fuckup

Errors in software used to just happen to one computer at a time.

Web applications mean that errors happen to lots and lots of people, all at the same time, often when they'd really rather be getting something done. Spurred on by Flickr, developers and designers have taken to adding a bit of personality to their branded error pages:

See more at: Who Has The Best Sorry Page?

"Sorry pages" are like a category of folk art unto themselves. They defuse a possibly difficult situation ("I can't get to my photos!") with humor, and help communicate expectations without overly technical jargon.

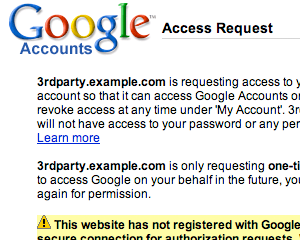

Keeping your applications on the web also means that moving your data around between apps is complicated. OAuth has jumped into the fray with a new standard for 3rd party API authentication. It's an extraction of several examples currently in the wild, and is designed to allow users of a service to grant temporary permission to a 3rd party to access their private data. It needs to be safe and revokable, and keep data consumers from having to ask users for their passwords. I've been watching this effort from a short distance, and I can honestly say that I have no desire to see politics, sausage, or technical specs get made. Especially when crypto-nerds get involved.

Read the OAuth page for an explanation of what they're on about, and what they've created.

OAuth mostly succeeds, but there's one new-to-me addition to the spec that dangerously interferes with meaningful, attributable Sorry pages like the ones illustrated above. I've written before about the niceties of Google Authsub, specifically the way it opens the door to experimentation without a pre-existing relationship with Google. OAuth has introduced a step into its specified flow that I think is a bad idea: instead of just sending the user to a service provider's authentication page, it's first necessary for the Consumer (the ones that want to access a user's data) to perform a little behind-the-scenes sleight of hand with the Provider (the app where the user's data lives) in order to juggle some keys back and forth. If this step fails, it's up to the Consumer to figure out what went wrong and report to the User that something has gone wrong, instead of letting the Provider do so themselves via their usual language / design: upside-down birds, Admiral Ackbar, massages, etc. There's a world of difference between a printer telling you "Flickr's not working" and you seeing it for yourself, and getting the comforting "massage" response that also tells you what's going on, whether it's unexpected, and when you might get to play with your toys again.

Sep 29, 2007 5:43am

remission

Even though they haven't released anything worth listening to since Dwayne Goettel died in 1995, Skinny Puppy has been a favorite band of mine since I first heard them 15 years ago.

Most of the ice-cold music I've posted here in the past has been, to my ears, directly descended from Skinny Puppy's 1984 EP, Remission. It's a perfect example of self-confidence absent the inflated expectations of a demanding fanbase, a trait also found in early Orbital records and Skinny Puppy's later albums Bites and Mind: T.P.I.

Sep 27, 2007 2:10am

whole foods

Oakland's first Whole Foods Market opened up today.

Here are some photos from when Gem and I visited in June, 2005:

Sep 24, 2007 12:53am

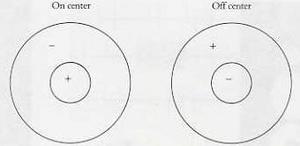

gefingerpoken

One of the core gestures in a multi-touch interface is the two-finger deforming drag, a descendent of the traditional mouse-driven drag and drop. The difference is that with two points of contact, interface elements such as windows can be moved, stretched, and turned. See what this would look like in a real interface five seconds into the big-ass table video. Implementing two-finger drag turns out to be less-than-obvious, but I've put together a short demo (see also a larger version with source code) that shows how to do it easily.

Drag the fingers and pretend they're your own:

There are two main difficulties: figuring out how precisely the two contacts should act on an object, and then translating those into the appropriate placement, sizing, and rotation of the object. We start with two rules: the object can be moved and turned, but not skewed, squashed, or otherwise deformed, and the fingers should stay in contact with the same points on the object throughout their movement.

Both troubles can be solved with the use of affine transformation matrices, the closest thing computer science has to a true, working hammer. I've described before how to derive a complete transformation from just three example points, so we need to figure out where to place a third point to complement the two fingers above. If we assume that the line between the two fingers is the hypotenuse of a right equilateral triangle, the we can guarantee a stable position for the invisible third finger by working out the two legs of the complete triangle. See it in action above when you drag.

Since version 8 or so, Flash has exposed proper matrix transformations on all clips in addition to the usual x, y, rotation, and scale. Unfortunately, the documentation leaves something to be desired, but it's possible to make Flash's Matrix class behave like it's supposed to by juggling a few of the arguments. After deriving a complete transformation from the movement of the two-finger triangle, we can apply it to the UI object and get something that moves properly.

Look out for two important functions in the source code:

- deriveThirdPoint() builds the triangle and adds a third ghost finger to the two physical ones.

- deformBox() applies the three fingers to repeatedly transform the photograph so that the fingers appear to be dragging it around the screen.

Sep 14, 2007 1:57am

blog all dog-eared pages: the structure of scientific revolutions

I first heard the term "paradigm shift" in high school (journalism camp, 1994, oh yeah). It gets used a lot these days, especially in the field of web technology, where every new web service, development framework, and business plan is a game changing paradigm shift. Curious where the term originated, I was led to Thomas S. Kuhn's The Structure of Scientific Revolutions, a 1962 essay seeking to explain changes in scientific belief over time. Kuhn's central argument is that progress does not happen by slow accretion of ideas over time, but by periods of stable work ("normal science") punctuated by crisis and rapid change ("paradigm shifts"). Crises are brought about by an accumulation of problems closed to normal scientific work, and are resolved through gestalt shifts that change research agendas and dominant theories.

The book also includes a 1969 postscript that flips the impact of the book on its head a bit. I've always seen the essay's argument as broadly applicable to other fields, but Kuhn says he developed it by applying the lessons of other fields to science.

Page 208, on applicability:

To one last reaction to this book, my answer must be of a different sort. A number of those who have taken pleasure from it have done so less because it illuminates science than because they read its main theses as applicable to many other fields as well. I see what they mean and would not like to discourage their attempts to extend the position, but their reaction has nevertheless puzzled me. To the extent that the book portrays scientific development as a succession of tradition-bounds periods punctuated by non-cumulative breaks, its theses are undoubtedly of wide applicability. But they should be, for they are borrowed from other fields. Historians of literature, of music, of the arts, of political development, and of many other human activities have long described their subjects in the same way. Periodization in terms of revolutionary breaks in style, taste, and institutional structure have been among their standard tools. If I have been original with respect to concepts like these, it has mainly been by applying them to the sciences, fields which had been widely though to develop in a different way.

On to the meat of the book...

Pages 2-3, on what is scientific:

The more carefully they study, say, Aristotelian dynamics, phlogistic chemistry, or caloric thermodynamics, the more certain they feel that those once current view of nature were, as a whole, neither less scientific nor more the product of human idiosyncrasy than those current today. ... Out-of-date theories are not in principle unscientific because they have been discarded.

Page 5, on normalcy:

Normal science, the activity in which most scientists inevitably spend most all their time, is predicated on the assumption that the scientific community knows what the world is like. Much of the success of the enterprise derives from the community's willingness to defend that assumption, if necessary at considerable cost. Normal science, for example, often suppresses fundamental novelties because they are necessarily subversive of its basic commitments. Nevertheless, so long as those commitments retain an element of the arbitrary, the very nature of normal research ensures that novelty shall not be suppressed for very long.

Page 20, on the coincidence of intelligibility and paradigm boundaries:

Both in mathematics and astronomy, research reports had ceased already in antiquity to be intelligible to a generally educated audience. In dynamics, research became similarly esoteric in the later Middle Ages, and it recaptured general intelligibility only briefly during the early seventeenth centrury when a new paradigm replaced the one that had guided medieval research. Electrical research began to require translation for the layman before the end of the eighteenth century, and most other fields of physical science ceased to be generally accessible in the nineteenth.

Page 55, on discovering:

Clearly we need a new vocabulary and concepts for analyzing events like the discovery of oxygen. Though undoubtedly correct, the sentence, "Oxygen was discovered," misleads by suggesting that discovering something is a single simple act assimilable to our usual concept of seeing. That is why we so readily assume that discovering, like seeing or touching, should be unequivocally attributable to an individual and to a moment in time. But the latter attribution is always impossible, and the former often is as well.

Page 76, on crisis and retooling paradigms:

So long as the tools a paradigm supplies continue to prove capable of solving the problems it defines, science moves fastest and penetrates most deeply through confident employment of those tools. The reason is clear. As in manufacture so in science - retooling is an extravagance to be reserved for the occasion that demands it. The significance of crises is the indication they provide that an occasion for retooling has arrived.

Page 88, on introspection during crisis:

It is no accident that the emergence of Newtonian physics in the seventeenth century and of relativity and quantum mechanics in the twentieth should have both been preceded and accompanied by fundamental philosophical analyses of the contemporary research tradition. Nor is it an accident that in both of these periods the so-called thought experiment should have played so critical a role in the progress of research. As I have shown elsewhere, the analytical thought experimentation that bulks so large in the writings of Galileo, Einstein, Bohr, and others is perfectly calculated to expose the old paradigm to existing knowledge in ways that isolate the root of crisis with a clarity unattainable in the laboratory.

Page 122, on the suddenness of paradigm shifts:

Paradigms are not corrigible by normal science at all. Instead, as we have already seen, normal science ultimately leads only to the recognition of anomalies and to crises. And these are terminated, not by deliberation and interpretation, but by a relatively sudden and unstructured event like the gestalt switch. Scientists often speak of the "scales falling from the eyes" or of the "lightning flash" that "inundates" a previously obscure puzzle, enabling its components to be seen in a new way that for the first time permits its solution.

Pages 150-151, on generational shifts:

How, then, are scientists brought to make this transposition? Part of the answer is that they are very often not. Corpernicanism made few converts for almost a century after Copernicus' death. Newton's work was not generally accepted, particularly on the Continent, for more than half a century after the Principia appeared. ... And Max Planck, surveying his own career in his Scientific Autobiography, sadly remarked that "a new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die."

Page 164, on choice of problem:

Unlike the engineer, and many doctors, and most theologians, the scientist need not choose problems because they urgently need solution and without regard for the tools available to solve them. In this respect, also, the contrast between natural scientists and many social scientists proves instructive. The latter often tend, as the former almost never do, to defend their choice of a research problem - e.g. the effects of racial discrimination or the causes of a business cycle - chiefly in terms of the social importance of achieving a solution. Which group would one then expect to solve problems at a more rapid rate?

Sep 6, 2007 12:16am

algebra 2.0

Wordie: Like Flickr, but without the photos.

FFFFOUND! is a web service that ... allows the users to post and share their favorite images found on the web.

wordie.org + ffffound.com = flickr.com

wordie.org = flickr.com - ffffound.com

flickr.com - wordie.org = ffffound.com

wordie.org - flickr.com + ffffound.com = 0

Sep 3, 2007 5:41pm

dave winer on twitter

By way of followup to that last post, can I say that Dave Winer has the best Twitter background image + user icon combination ever?

Sep 2, 2007 6:16am

uselessness

As one of the people responsible for Twitter Blocks (really it was Ryan and Tom who made it - on this project, I'm "management"), it's been interesting reading feedback to the project's launch. Tom summarized a particular strain of it as Criticism For Twitter Blocks. Go, read.

...

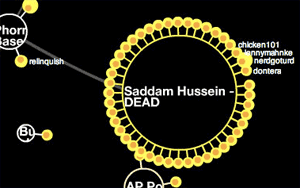

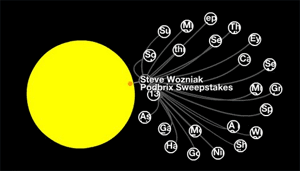

So we get this a lot: "Beautiful! But useless!". We've heard it in response to most projects we've done over the past few years (one exception has been Oakland Crimespotting, whose stock yokel response is: "no way am I moving to Oakland!").

By now, we're fairly accustomed to it. I've historically stayed mum, in the belief that this particular critique is best met with silence, because what is there to add? This current case rankles a bit, since a lot of those snarks are coming via Twitter, Pownce, and Jaiku messages. Twitter is practically the "fun, but useless, but oddly popular" poster child of the moment, so it's ironic to see people who've taken the leap to its particular brand of short-messaged-based playtime suddenly waxing utilitarian (for example, Dave Winer). Tom argues that it's worth focusing on fun once in a while instead of just utility, monetization, and features. I'm arguing that a lot of the people crowing "but useless!" have already taken that plunge, yet lack the self-awareness or humility to see it for what it is. There are plenty of but-useless things in the world that serve as emotional bonding points, amusements, attractions, and macguffins. Practically all of social media falls under this category for me, a form of mediated play that requires a suspension of disbelief in rational purpose to succeed.

There are of course legitimate reasons to find Twitter and Blocks annoying: Blocks likes teh CPU, not everyone enjoys frequent tiny updates from people, there are jerks in any social service, Blocks has a big Motorola ad next on it, and so on. Worries about Obvious Corp.'s business sustainability and freakouts that Blocks was launched (by us) despite the presence of bugs in Twitter are not legitimate reasons.

But, since we're on the topic, I'm going to suggest that Blocks is our hat in the ring for traversing the social graph. Unlike the friends views on the existing site, the only people who show up are guaranteed to be recently active, so there's no deadwood problem. Also unlike the existing friends view, we've introduced two dimensions to show a second degree of separation, leading to regular "I had no idea so-and-so was on Twitter" moments since the first experimental layouts were done and presented one month ago. Also, it doesn't look like some suck-ass sticks-and-rocks graph.

Sufficiently useful?

Aug 31, 2007 12:51am

digg arc history

I just posted a visual diary of Digg Arc development to the Stamen blog. Go check it out, and relive the glory...

Aug 29, 2007 1:53am

minilogue

Two Minilogue tracks doing it for me today:

- Inca (26.9MB)

- Certain Things About Us, Part 1 (15.2MB)

This is also not half bad:

- Audion: Fire (14.9MB)

Aug 21, 2007 6:53am

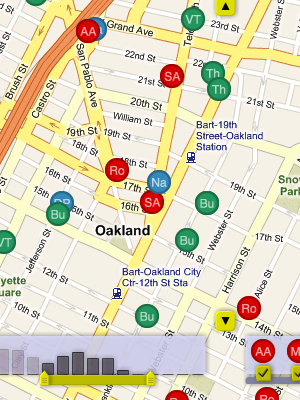

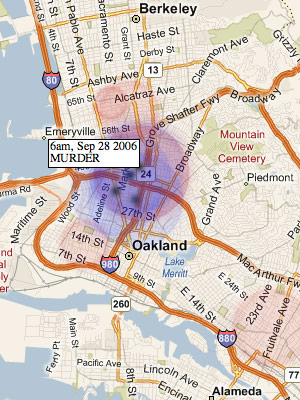

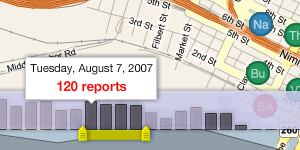

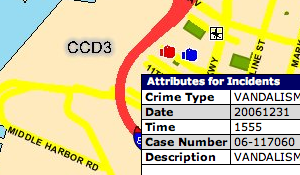

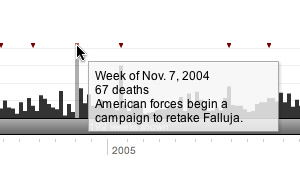

oakland crime maps IX: post-launch

Last week, we launched Oakland Crimespotting, capping off eight months of the occasional data sketching I've been recording on this site. I've covered a few speculative topics here that didn't graduate to the public version of the site, and there have been a number of interesting new things that were sure to add.

The initial work on scraping (post I, post II) is still in use. Thankfully, the city hasn't changed CrimeWatch much since December, so our nightly collection runs are still chugging along happily. We do four collections every evening: past four days, and then individual days a week, two weeks, and one month in the past. The overlap is because we've noticed that the Oakland PD amends and modifies crime reports, and the whole map site is frequently down altogether.

Two later pieces (post III, post IV) introduced an idea on time-based display, but ultimately it was effective to just drop in the dots and add live draggy/zoomy controls. This is something we've consistently found with other projects, too: it's so often the case that the "right" design is not the technically complicated one, but the one that gets feedback and interactivity just so.

Finally, I wrote up a few pieces (post VI, post VII) on public data indexing. This is something I continue to find interesting, but at the volume of traffic we're pushing, it's totally unnecessary. Turns out MySQL is kind of awesome at this sort of thing.

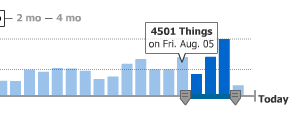

There are two big features on the map interface that only emerged when designing and developing it with Tom and Eric. The date slider is something that we shamelessly nicked from Measure Map, though we added the bit where per-day columns act as a display showing which data has been loaded. This part is still under active development. The idea is that the background should be draggable, to allow people to navigate back further in time than 30 days.

Measure Map:

Ours:

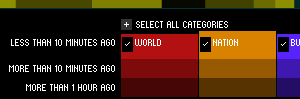

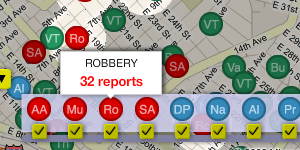

The second is the crime type picker, an interface whose affordances we borrowed from Newsmap. This one's quite simple, but it does trigger the visual spotlight effect that makes it possible to pick out crimes of a certain type throughout the map.

Newsmap:

Ours:

It was important that every view of the map be linkable and sharable, so we imported a number of ideas that Tom developed for our last map project, Trulia Hindsight. The thing to watch for is how the URL of the page you're looking at changes as you pan and zoom around. It can be copied, shared in an e-mail, sent over IM to a friend, and posted in a blog.

An "official" API has not been described or announced, but it will most likely include the site's Atom / GeoRSS feeds. These implement a small subset of the OpenSearch request specification:

- bbox is a geographical bounding box in the order west, south, east, north.

- dtstart and dtend are start and end dates, in YYYY-MM-DDTHH:MM:SSZ format.

Look for these hanging off of the /crime-data endpoint.

The site is hosted on Amazon's EC2 service, on a 10 cent/hour virtual server running Debian Linux, MySQL, Apache, and PHP. The static maps are generated by Aaron Cope's recent addition to Modest Maps, ws-compose.py. It's a BaseHTTPServer that stitches tiles into map PNG's, and I've been running four of them (and caching the responses) for the past week with no troubles.

I've rediscovered the joys of procedural PHP4 with this project. EC2 has proven to be a real champ, allowing us to set up a test machine, deploy a living site, but always holding out the possibility of migration to a "real" server. At a total of $80/month, the virtual Debian machine may last for a while.

Next steps may include San Francisco and Berkeley.

Aug 15, 2007 11:16pm

oakland crime maps VIII: first public launch

I promised we'd have something to show, right? In response to the red wave of homicides that swept Oakland two weeks ago, Tom and I published a visual map of crime reports in Oakland.

I'll write more later, but for now go and explore.

Aug 10, 2007 4:31pm

ffffound! updated

FFFFOUND! got some updates that seem to answer two of my comments from last week. I now know who my followers are, and whose images I consistently like. Something interesting I pay attention to when browsing the site: because it's Japanese there are a lot of obviously-Japanese usernames around. The list of my followers seems to be largely "western" names. I'm curious if a closer look at the site would reveal any clear difference in tastes between the U.S./Euro and Asian user populations? I do tend to post a lot of characteristically western images. I also consider it a feature that the site is so image-focused that there's not generally very much text around.

Aug 5, 2007 7:04pm

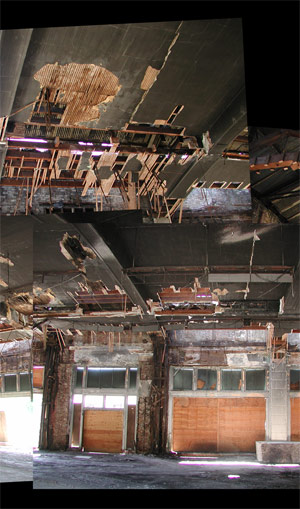

blog all dog-eared pages: the social life of small urban spaces

The Social Life of Small Urban Spaces is a brief book on urban plaza design from William Whyte, author of The Organization Man. Here, Whyte focuses on behavior in urban plazas of the sort generally found at the foot of corporate skyscrapers in New York, San Francisco, and other major cities. Various building ordinances and rules require that developers include such plazas in their design, but they are wind-blown and vacant as often as not. This book is the summary of an ethnographic study of these spaces, based on extensive filming of use patterns in 1980.

As with Organization Man, Whyte is a sharp observer and pithy commentator.

Social Life focuses on several topics in turn: crowds (and why people like them), sitting space, fountains, bums, food vendors, and filming techniques for study. The book argues that architects and developers imbue their developments with an emotional tenor that people instinctively pick up on, and shows how it affects use and health. There's a lot here that's directly applicable to social systems on the web, such as passages on distrustful design and "megastructures", the car-focused, inward-facing malls and complexes popular in cities over the past few decades. Whyte isn't optimistic about their future, and I think there are lessons to be learned by inward-facing online destinations such as Facebook.

In the same vein, Wired editor Chris Anderson recently gave up on Second Life because "if you're going to evoke real world conceits such as "places" that you "go to", then you've got to deal with real world expectations of those places. We don't like like empty buildings in RL; why should be more tolerant of them in SL just because there are traces of those who have been there before?"

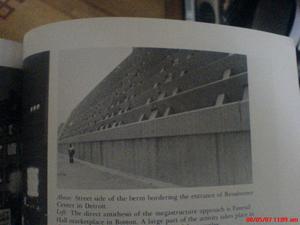

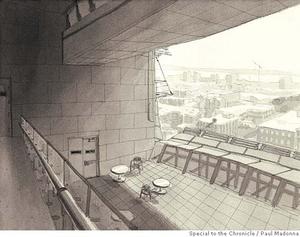

Overall, Whyte's focus on describing use shows how optimistic architectural renderings break down in the face of natural human use patterns. I especially enjoyed the contrast between Whyte's choice of images for an example megastructure, the Detroit Renaissance Center. Here's an image typical of Google's image results for the center:

Here's Whyte's street-level view:

Bleak.

It's a hopeful book, though - no problem seems insurmountable in the face of realistic expectations and a hot dog stand or two.

Page 19, on crowds:

What attracts people most, it would appear, is other people. If I belabor the point, it is because many urban spaces are being designed as though the opposite were true, and that what people liked best were the places they stay away from. People often do talk along such lines; this is why their responses to questionnaires can be so misleading. How many people would say they like to sit in the middle of a crowd? Instead, they speak of getting away from it all, and use words like "escape", "oasis", "retreat". What people do, however, reveals a different priority.

Page 23, on similarity:

The strongest similarities are found among the world's largest cities. People in them tend to behave more like their counterparts in other world cities than like fellow nationals in smaller cities. Big-city people walk faster, for one thing, and they self-congest. ... Modest conclusion: given the basic elements of a center city - such as high pedestrian volumes, and concentrations and mixtures of activities - people in one place tend to act much like people in another.

Page 33, on benches:

Benches are artifacts the purpose of which is to punctuate architectural photographs. They're not so good for sitting. There are too few of them; they are too small; they are often isolated from other benches or from whatever action there is on the plaza. Worse yet, architects tend to repeat the same module in plaza after plaza, unaware that it didn't work very well in the first place.

Page 35, on chairs:

The possibility of choice is as important as the exercise of it. If you know you can move if you want to, you feel more comfortable staying put. This is why, perhaps, people so often move a chair a few inches this way and that before sitting in it, with the chair ending up about where it was in the first place. The moves are functional, however. They are a declaration of autonomy, to oneself, and rather satisfying.

Page 61, on distrust and "undesirables":

Many corporation executives who make the key decisions about the city have surprisingly little acquaintance with the life of its streets and open spaces. ... To them, the unknown city is a place of danger. If their building has a plaza, it is likely to be a defensive one that they will rarely use themselves. Few others will either. Places designed with distrust get what they were looking for and it is in them, ironically, that you will most likely find a wino.

Page 64, on guards and plaza mayors:

...it is characteristic of well-used places to have a "mayor". He may be a building guard, a newsstand operator, or a food vendor. Watch him, and you'll notice people checking in during the day. ... One of the best mayors I've seen is Joe Hardy of the Exxon Building. He is an actor, as well as the building guard, and was originally hired by Rockefeller Center Inc. to play Santa Claus, whom he resembles. Ordinarily, guards are not supposed to initiate conversations, but Joe Hardy is gregarious and curious and has a nice sense of situations. ... Joe is quite tolerant of winos and odd people, as long as they don't bother anybody. He is very quick to spot real trouble, however.

Page 85, on megastructures:

The ultimate development in the flight from the street is the urban fortress. In the form of megastructures more and more of these things are being put up - huge, multipurpose complexes combining offices, hotels, and shops - such as Detroit's Renaissance Center, Atlanta's Omni International. Their distinguishing characteristic is self-containment. While they are supposed to be the salvation of downtown, they are often some distance from the center of downtown, and in any event tend to be quite independent of their surroundings, which are most usually parking lots. The megastructures are wholly internalized environments, with their own life-support systems. Their enclosing walls are blank, windowless, and to the street they turn an almost solid face of concrete or brick.

Page 89, on the inevitable decline of megastructures:

And it is going to date very badly. Forms of transportation and their attendant cultures have historically produced their most elaborate manifestations just after they have entered the period of their obsolescence. So it may be with megastructures and the freeway era that bred them. They are the last convulsive embodiment of a time passing, and they are a wretched model for the future of the city.

Appendix A is a guide to using film in research.

Page 103, on research fatigue:

But, thanks to the long term time span of our study, we got our second wind and finally learned a few simple but important lessons. The crux is evaluation. Taking the film is easy. So is showing it. It's even fun. But when you start figuring out, frame by frame, what the film has to tell, and what it means, you will find the process can be enormously time consuming, and before long, tedious. That's where it all breaks down. Unless you master this phase, you will not stay the course. Happily, there are ways to shortcut the tedium, greatly speed up evaluation, and in the process make it more accurate.

Page 109, on being careful:

The real danger comes in photographing illicit activities, especially when you do it without realizing it. Our narrowest call was when we set up a perch on the fourth floor of a building in the middle of a block on 101st Street. The object was to observe the social life on the stoops and fire escapes. Before long, Cadillacs with out-of-state license plates began stopping in front of the building opposite, and there was considerable movement in and out of the basement door. A wholesale heroin operation was under way. ... At length it was discerned that a look-out with binoculars was alerting people to the impending arrival of police cars. The, one day, we saw on the film that the binoculars were trained directly on our camera. We withdrew.

Aug 1, 2007 12:24am

blog all dog-eared pages: sketching user experiences

Sketching User Experiences is Bill Buxton's new book arguing that the process of sketching is distinct from prototyping, and an integral part of design. Buxton opens with the canonical example of great design, Apple's iPod, to show that its "overnight" success actually came after 3+ years of development and updates, and moves on to talk about the lack of design in typical software organizations. These two topics are slightly out-of-tune with the remainder of the book, but I believe they were included to bridge the main thesis to Buxton's role as a Microsoft researcher. In particular, I like the argument for introducing an explicit design phase to the world of software development in accordance with Fred Brooks' opinion that mistakes caught early are mistakes fixed cheaply.

About 1/3rd through the book (see page 111, below), Buxton cuts to the chase with an 11-point definition of sketching as distinct from prototyping. Most importantly to Buxton, sketches are fast, cheap, and divergent. They develop quickly with only minimal detail to make a point, and are intended to communicate the essential ideas of a maximally-wide variety of design possibilities.

He also calls out the example of IDEO's Tech Box, a curated company library of technological toys and materials enabling rapid exploration and research in product design. This was the book's most explicit tie to Mike Kuniavsky's Sketching In Hardware conference series, demonstrating how the characteristics of a good sketch transcend pencil and paper.

Page 36, on wooden maps:

These are 3D wooden maps carved by the Ammassalik of east Greenland. The larger one shows the coastline, including fjords, mountains, and places where one can portage and land a kayak. The thinner lower maps represents a sequence of offshore islands. Such maps can be used inside mittens, thereby keeping the hands warm; they float if they fall in the water; they will withstand a 10 metre drop test; and there is no battery to go dead at a crucial moment.

Page 69, on the difficulty of making new things:

It suddenly occurred to me that our company was not alone in this situation. Rather, as far as I could make out, virtually ever other software company was pretty much in the same boat. After establishing their initial product, they were as bad as it as we were. When new products did come from in-house development, they were generally the result of some bandit "skunk works" rather than some formal sanctioned process (not a comforting thought if you are a shareholder or an employee). Across the board, the norm was that most new products came into being through mergers or acquisitions.

Page 73, on why:

My belief is that one of the most significant reasons for the failure of organizations to develop new software products in-house is the absence of anything that a design professional would recognize as an explicit design process. Based on my experience, here is how things work today. Someone decides that the company needs a new product that will do "X". An engineering team is then assigned to start building it. ... The only good thing about this approach is that one will never be accused of not conforming to the original design. The bad news is that this is because there is no initial design worthy of the term.

Page 76, on a suggested product development process:

Page 90, on the Trek Y-Bike:

The engineering prototype shown in Figure 28 works. If you look at the photo carefully, you will see that if you added pedals, sprockets, wheels, a chain, brakes, and handle-bars to this prototype it would be perfectly functional. You could ride it. ...it is almost certain that it would be a commercial flop. Why? Anyone can see that the bike is not complete. Not because of the missing parts, but because the design is not complete. What is obvious here with mountain bikes is not obvious with software. My impression is that what we see in Figure 28 relfects the state in which software products ship. They kind of work, but are as far from complete as this version of the bike is.

Page 108-109, some example sketches of bicycles showing speed and disposability:

Page 111, on a definition of sketching:

Quick: A sketch is quick to make, or at least gives that impression.

Timely: A sketch can be provided when needed.

Inexpensive: A sketch is cheap. Cost must not inhibit the ability to explore a concept, especially early in the design process.

Disposable: If you can't afford to throw it away when done, it is probably not a sketch. The investment with a sketch is in the concept, not the execution. By the way, this does not mean that they have no value, or that you always dispose of them. Rather, their value depends largely on their disposability.

Plentiful: Sketches tend not to exist in isolation. Their meaning or relevance is generally in the context of a collection or series, not an isolated rendering.

Clear vocabulary: The style in which a sketch is rendered follows certain conventions that distinguish it from other types of renderings. The style, or form, signals that it is a sketch. The way that lines extend through endpoints is an example of such a convention, or style.

Distinct gesture: There is fluidity to sketches that gives them a sense of openness and freedom. They are not tight and precise, in the sense that an engineering drawing would be, for example.

Minimal detail: Include only what is required to render the intended purpose or concept. Lawson (1997, p.242) puts it this way: "... it is usually helpful if the drawing does not show or suggest answers to questions which are not being asked at the time." Superfluous detail is almost always distracting, at best, no matter how attractive or well rendered. Going beyond "good enough" is a negative, not a positive.

Appropriate degree of refinement: By its resolution or style, a sketch should not suggest a level of refinement beyond that of the project being depicted. As Lawson expresses it, "... it seems helpful if the drawing suggests only a level of precision which corresponds to the level of certainty in the designer's mind at the time."

Suggest and explore rather than confirm: More on this later, but sketches don't "tell," they "suggest." Their value lies not in the artifact of the sketch itself, but in its ability to provide a catalyst to the desired and appropriate behaviors, conversations, and interactions.

Ambiguity: Sketches are intentionally ambiguous, and much of their value derives from their being able to be interpreted in different ways, and new relationships seen within them, even by the person who drew them.

Page 169, on the IDEO Tech Box:

It consists of hundreds of gadgets. Most are laid out on open shelf-like drawers. Some are toys, and are just there because they are clever, fun, or embody some other characteristic that may inspire, amuse, or inform (or perhaps all three). Others might be samples of materials that could be useful or relevant to future designs. ... Since the Tech Box is a kind of mini library or musem, it has someone from the studio who functions as its "curator" or "librarian." And like conventional libraries, all of the objects in the collection are tagged and catalogued so that supplementary information can be found on the studio's internal website. As an indication of how much store the company puts in having its employees have a shared set of references, there is a Tech Box in every one of their studios worldwide. Furthermore, even though anyone can add things to the collection, if you do, you must get one for the Tech Box in every on of the studios. These are circulated to the other studios by the local curator, who also makes sure that the appropriate entry is made into the associated web database.

Page 215, on the unevenly-distributed future:

Here we see the same thing. The period from concept to product is about 20 years in the industry in general, and in user interface technologies, specifically. So much for fast-changing technology! ... If history is any indication, we should assume that any technology that is going to have a significant impact over the next 10 years is already 10 years old!

Pages 350-351, on video sketches of matter duplication:

Page 413, on iconoclasm:

If you are going to break something, including a tradition, the more you understand it, the better job you can do. The same is true in classical art and design education. There are classes such as printmaking, life drawing, and water colour, whose purpose is to lay a solid foundation in technique. This underlies the complementary set of classes that focus on the content of the work - the art rather than the technique.

Page 418, in closing:

Like the word "mathematics," I think the word "future" should be pluralized, as in "futures." As long as it is singular, there is a bias toward thinking that there is only one future. That takes the emphasis, and attendant responsibilities, away from the reality that there are many possible futures, and that it is our own decisions that will determine which one we end up with.

Jul 30, 2007 6:05am

ffffound!

A lot of the links in my snippets feed are visual, but I only post a small portion of the images I encounter. Even then, context is a bitch. All of it gets fed into my Del.icio.us account, which is Flickr-aware but not otherwise picture-friendly. So, I was really happy to find FFFFOUND! last week, thanks to Lydia for the invite.

FFFFOUND! is a website for collecting and sharing images from the web, like Flickr for other people's pictures:

FFFFOUND! is a web service that not only allows the users to post and share their favorite images found on the web, but also dynamically recommends each user's tastes and interests for an inspirational image-bookmarking experience!!

I've been using it for the past week or so, and have really been enjoying the experience. It fills a niche that my other micro-bloggy services, Twitter, Pownce, and Reblog, can't. It also has some interesting borderline social features thrown in to boot.

First, the good:

The site provides a bookmarklet for importing images. The expectation is that you casually throw interesting images over to your account as you move about the web. Activating the bookmarklet adds a heavy yellow border to all page images, so that clicking on them imports them to FFFFOUND!. The source URL is sent along as well, to maintain the connection back to the original location.

It's possible to add other people's images on FFFFOUND! as well, by clicking the "I (heart) This!" button below each site image.

Recommendations branch from each image, via a collection of related thumbnails. Browsing the site is a many-tabbed experience, and I routinely follow a thread of interesting pictures every time I visit. There's a healthy population of users here with excellent taste, most of them Japanese. Images range from Processing screen grabs, to fashion photography, to architecture, to excerpts from graphic design portfolio websites. There's a heavy emphasis on inspiration among the pictures I've browsed. There are also personal recommendations behind the "New For You!" link in the navigation. Both seem to work just like your basic Amazon "people who liked this also liked..." feature.

The site has no tags, which makes me happy. All connections and content are purely visual.

The site also has a decent respect for animated GIFs, even in thumbnail and preview form.

There are a few bits that need work.

The only way to pull new images into the site is via the bookmarklet. I've found this limiting in two cases: many excellent images live on the web in thumbnail form, with links to full-size versions invisible to FFFFOUND!. Also, I spend a lot of time on an old computer with a slow-javascript-performance browser, and I'm aggressive with turning off JS and Ajax on many sites. The bookmarklet doesn't work on Flickr and certain other sites unless I make a special point of temporarily enabling javascript. This inhibits the flow.

The site's "followers" feature informs me that I have 8 followers, but it doesn't say who they are. I assume these are people who like the same images as me and find them after I do, but I can't see their identities to understand what it is they find interesting. It also doesn't tell me whose follower I am, so I can see whose tastes I tend to share. This part may actually be a feature, keeping a focus on the pictures instead of the users.

There's no way to deny recommendations. You can either love an image, or mark it as inappropriate, but you can't politely decline. This decreases the value of the recommendations feature, making it necessary to wait for uninteresting stuff to scroll off the bottom.

The site is in private beta, and each new user gets a single invitation to pass on. Mine's already accounted for, but it sure would be nice to see a more ambitious invite policy.

They offer a screensaver built with ScreenTime, but it totally crashes my shit and generally doesn't work.

Overall, though, FFFFOUND! is a joy to use. I've been introduced to a steady stream of beautiful work, and the "followers" count is a tiny nudge of positive social feedback. I love seeing the images that inspire me framed on a gallery wall like this. The domain is just a few months old, and the site seems to be in a sweet spot of growth, with quality users posting beautiful pictures, and not a lot of noise. It's interesting joining a service where I don't know anyone (yet).

The site is cagey about its source, but the WHOIS lookup says it's a project of Yugo Nakamura, designer of the freakishly awesome Uniqlock and this ball dropping thing that's become something of a joke around the office for its frequent appearance in conversation.

Jul 20, 2007 7:06am

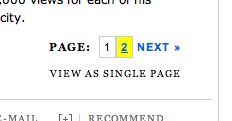

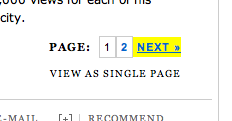

slate's page navigation

These made me very happy:

They're the page navigation links at the bottom of Slate's multi-page stories, and each image shows how the set of links looks when the mouse if hovering on the 1, the 2, and the NEXT, respectively. I'm very much enjoying the fact that the yellow highlight on the rightmost link matches the vertical height of the other two, making the whole block a tightly coupled unit.

Jul 14, 2007 8:10pm

modest map tutorial

Heads up, new Modest Maps tutorial featuring Zoomify and AC Transit.

Jul 8, 2007 7:13pm

federal building

The new San Francisco federal building opens tomorrow, and there are a few details I like very much.

There's a sky garden on the 11th floor that overlooks SOMA, and is open to the public behind a security checkpoint:

The exterior has a nice, jagged look to it, with lots of energy-saving skin features:

The elevators only stop on every 3rd floor, "to improve worker health by nudging them to use stairways - and also create crossroads where employees run onto each other, since each three-story segment includes a lobby with art and a viewing platform."

Jul 8, 2007 6:52pm

transit data

I've been collecting Bay Area public transit schedules from 511.org. I have loose plans for them, but it's going to be awhile before I get around to doing anything.

Meanwhile, here's the data: transit.db.gz, 6.4MB compressed SQLite 3 database, ~84MB uncompressed. Contains all stops for SF MUNI, AC Transit, AC Transit transbay service, and BART, in the following format: